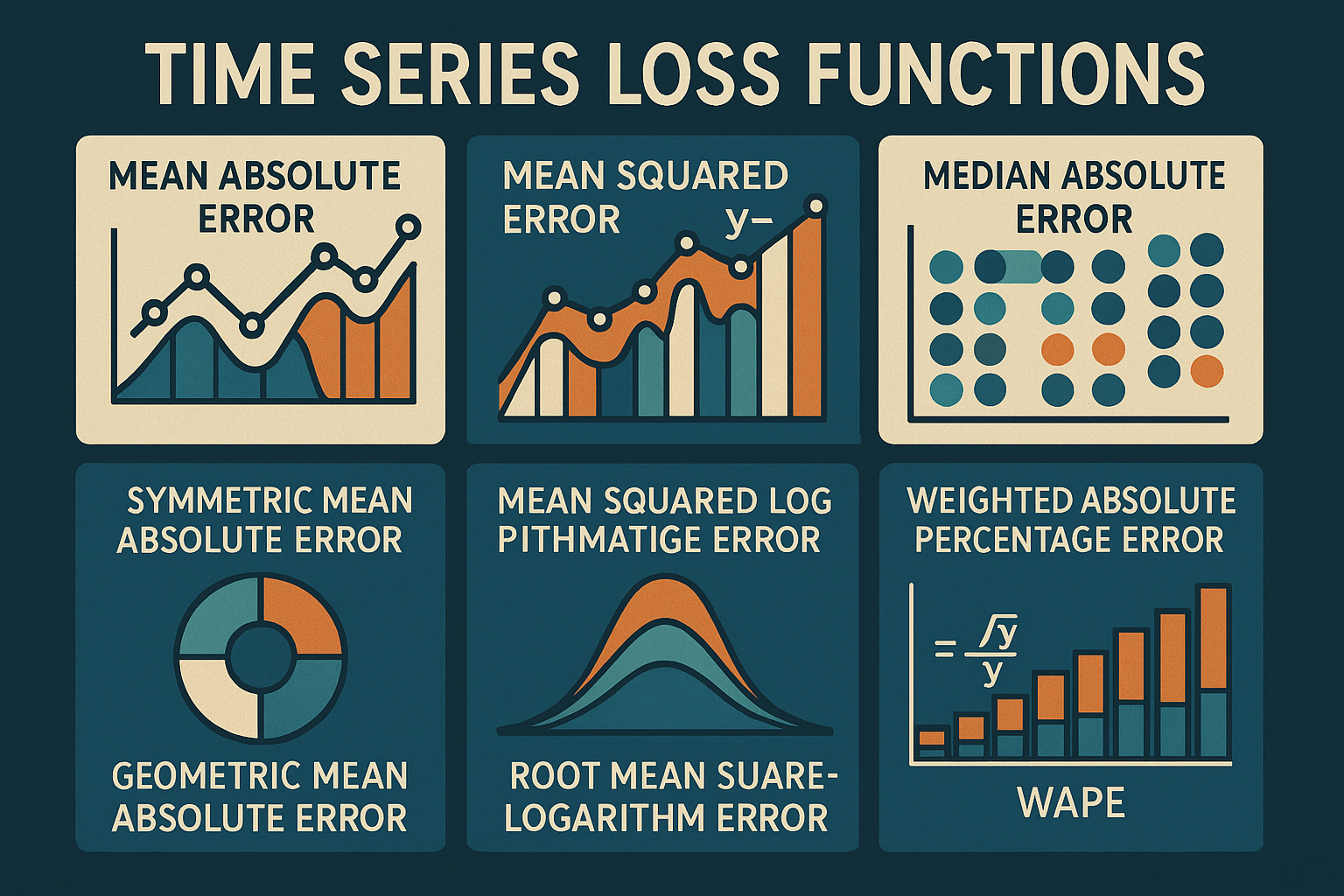

Loss Function for Time Series Prediction

Loss functions play a crucial role in determining how time series regression models perform in real-world scenarios. In any forecasting task, there is a trade-off between over- and under-prediction, and the choice of loss function determines whether the model is penalized symmetrically or asymmetrically for the two kinds of error. In some cases, one kind of error must be avoided far more than the other. For instance, when a stock of inventory is mission-critical and the holding cost is insignificant compared to the stock-out cost, the model must be penalized more for under-prediction than for over-prediction. This article surveys the most common loss functions and the trade-offs each one makes.

Absolute Error

Absolute error is a class of loss functions that measures the difference between the predicted and actual values. This error function is scale-dependent, meaning its magnitude depends on the magnitude of the values being predicted. Consequently, it is unit-sensitive. For instance, a model that predicts in kilometers will report numerically much smaller errors than the same model predicting in millimeters, even though both are equally accurate.
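
As a rough illustration of this unit sensitivity, the sketch below evaluates one and the same prediction in kilometers and in millimeters. The helper name `absolute_error` and the sample values are made up for the example.

```python
def absolute_error(actual, pred):
    """Absolute difference between one actual value and one prediction."""
    return abs(actual - pred)

MM_PER_KM = 1_000_000

# The same prediction, expressed first in kilometers and then in millimeters.
actual_km, pred_km = 2.0, 1.5
error_km = absolute_error(actual_km, pred_km)
error_mm = absolute_error(actual_km * MM_PER_KM, pred_km * MM_PER_KM)

print(error_km)  # 0.5
print(error_mm)  # 500000.0
```

The prediction is equally good in both cases; only the unit changed.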

The absolute error class of loss function typically calculates the absolute value of the difference between the actual and predicted values. Each loss function in this class aggregates the absolute difference in a specific manner. The absolute error class of loss function treats errors equally and applies a linear penalty to all deviations, irrespective of their magnitude. The most common ones are discussed in the following subsections.

A. Mean Absolute Error

\[\text{MAE} = \frac{1}{n} \sum_{i=1}^n | \text{actual}_i - \text{pred}_i |\]

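The Mean Absolute Error (MAE) calculates the average of the absolute differences between actual and predicted values. This makes the MAE simple and interpretable, since it is expressed in the same unit as the data. Because it does not disproportionately penalize larger errors, it is less sensitive to outliers than the squared-error losses, although as a mean it can still be pulled upward by a few extreme errors.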
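
A minimal sketch of the MAE formula in plain Python follows. The function name and the sample data are illustrative assumptions, not part of any particular library.

```python
def mean_absolute_error(actual, pred):
    """Average of |actual_i - pred_i| over all samples."""
    return sum(abs(a - p) for a, p in zip(actual, pred)) / len(actual)

actual = [100, 200, 300, 400]
pred = [110, 190, 330, 400]

# Absolute errors are 10, 10, 30 and 0, so the mean is 50 / 4.
mae = mean_absolute_error(actual, pred)
print(mae)  # 12.5
```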

B. Median Absolute Error

\[\text{MdAE} = \text{median}(|\text{actual}_i - \text{pred}_i|)\]

The Median Absolute Error (MdAE) summarizes the absolute differences with their median instead of their mean. This makes the loss function more resilient to outliers, since a few extreme errors do not move the median.
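
The sketch below contrasts the median and the mean of the absolute errors on made-up data in which the last prediction is far off. The function names are assumptions chosen for the example.

```python
from statistics import mean, median

def absolute_errors(actual, pred):
    """List of |actual_i - pred_i|."""
    return [abs(a - p) for a, p in zip(actual, pred)]

def median_absolute_error(actual, pred):
    """Median of the absolute errors."""
    return median(absolute_errors(actual, pred))

actual = [100, 200, 300, 400, 500]
pred = [110, 190, 330, 400, 1500]  # the last prediction is an outlier

# Absolute errors are 10, 10, 30, 0 and 1000.
median_error = median_absolute_error(actual, pred)
mean_error = mean(absolute_errors(actual, pred))
print(median_error)  # 10
print(mean_error)    # 210
```

The single outlier multiplies the mean many times over but leaves the median at the typical error.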

C. Geometric Mean Absolute Error

\[\text{GMAE} = \left( \prod_{i=1}^n | \text{actual}_i - \text{pred}_i | \right)^{\frac{1}{n}}\]

The Geometric Mean Absolute Error (GMAE) calculates the central tendency of absolute differences between actual and predicted values by taking the product of these differences and raising the result to the power of 1/n, where n is the number of samples. This loss function is particularly suitable for problems where errors should be measured in a multiplicative rather than additive manner, such as when modeling processes that inherently exhibit exponential growth or decay.

However, GMAE has a critical limitation: if any of the absolute differences are zero, the entire product becomes zero, making the error measurement meaningless. This sensitivity to zero errors can pose a significant challenge, especially in datasets where perfect predictions (i.e., zero errors) occur.
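
The zero-error problem is easy to reproduce. The following sketch assumes Python 3.8 or later for `math.prod`; the function name and data are illustrative.

```python
import math

def geometric_mean_absolute_error(actual, pred):
    """n-th root of the product of the absolute errors."""
    errors = [abs(a - p) for a, p in zip(actual, pred)]
    return math.prod(errors) ** (1 / len(errors))

# Absolute errors are 2 and 8, so the geometric mean is sqrt(16).
gmae = geometric_mean_absolute_error([10, 20], [12, 12])
print(gmae)  # 4.0

# Adding one perfect prediction makes the product, and the metric, zero.
gmae_with_zero = geometric_mean_absolute_error([10, 20, 30], [12, 12, 30])
print(gmae_with_zero)  # 0.0
```

For long series the raw product can overflow or underflow, so a practical version would usually average the logarithms of the errors instead, which still breaks down on zero errors.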

D. Weighted Mean Absolute Error

\[\text{WMAE} = \frac{\sum_{i=1}^n w_i \cdot |\text{actual}_i - \text{pred}_i|}{\sum_{i=1}^n w_i}\]

The Weighted Mean Absolute Error (WMAE) functions similarly to the Mean Absolute Error (MAE) but introduces the flexibility of assigning weights to different observations. This allows certain errors to be emphasized more than others, making WMAE especially useful when some types of errors need to be penalized more heavily. For instance, in forecasting applications, over-forecasting errors can be assigned higher weights than under-forecasting errors, allowing the model to align better with business or operational objectives. This flexibility makes WMAE a powerful tool for tailoring the loss function to specific needs and desired outcomes.
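
A small sketch of the WMAE formula, with one weight per observation. The function name, data, and weights are hypothetical; how the weights are chosen is left to the application.

```python
def weighted_mean_absolute_error(actual, pred, weights):
    """Sum of w_i * |actual_i - pred_i| divided by the sum of the weights."""
    weighted = sum(w * abs(a - p) for a, p, w in zip(actual, pred, weights))
    return weighted / sum(weights)

actual = [100, 200, 300]
pred = [110, 190, 330]
weights = [1, 1, 2]  # the third observation counts twice as much

# (1 * 10 + 1 * 10 + 2 * 30) / (1 + 1 + 2)
wmae = weighted_mean_absolute_error(actual, pred, weights)
print(wmae)  # 20.0
```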

Squared Error

Squared error is a class of loss functions built on the square of the difference between the predicted and actual values. Squaring prevents over- and under-predictions from cancelling each other out, and it penalizes the model increasingly heavily as a prediction moves further from the true value. Like absolute error, this class of loss function is scale-dependent and is strongly influenced by the unit of measurement used in the problem.

The loss functions in this class differ only in how they aggregate the squared differences. Unlike the absolute error, which applies a linear penalty, the squared error applies a quadratic one: doubling the deviation quadruples the penalty. The most common loss functions are discussed in the following subsections.

A. Mean Squared Error

\[\text{MSE} = \frac{1}{n} \sum_{i=1}^n (\text{actual}_i - \text{pred}_i)^2\]

The Mean Squared Error (MSE) calculates the average of the squared differences between the actual and predicted values. Squaring the errors amplifies the sensitivity to outliers, and because the result is expressed in squared units, it is harder to interpret than the MAE.
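
A minimal sketch of the MSE formula on illustrative data (the function name is an assumption):

```python
def mean_squared_error(actual, pred):
    """Average of (actual_i - pred_i)^2 over all samples."""
    return sum((a - p) ** 2 for a, p in zip(actual, pred)) / len(actual)

actual = [100, 200, 300, 400]
pred = [110, 190, 330, 400]

# Squared errors are 100, 100, 900 and 0: the single error of 30
# contributes 900 of the 1100 total.
mse = mean_squared_error(actual, pred)
print(mse)  # 275.0
```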

B. Root Mean Squared Error

\[\text{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^n (\text{actual}_i -\text{pred}_i)^2}\]

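The Root Mean Squared Error (RMSE) is calculated by taking the square root of the Mean Squared Error (MSE). The square root brings the error back to the unit of the original data, which enhances interpretability and compresses the inflated scale of the MSE. It does not make the metric robust, however: the RMSE is still dominated by large errors.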
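
The sketch below computes the RMSE on made-up data; the function name is an assumption.

```python
import math

def root_mean_squared_error(actual, pred):
    """Square root of the mean of the squared errors."""
    mse = sum((a - p) ** 2 for a, p in zip(actual, pred)) / len(actual)
    return math.sqrt(mse)

actual = [100, 200, 300, 400]
pred = [110, 190, 330, 400]

# The MSE of this data is 275 (squared units); the RMSE is about 16.58,
# in the same unit as the data.
rmse = root_mean_squared_error(actual, pred)
print(round(rmse, 2))  # 16.58
```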

C. Root Median Squared Error

\[\text{RMdSE} = \sqrt{\text{median}\left((\text{actual}_i - \text{pred}_i)^2\right)}\]

The Root Median Squared Error (RMdSE) is a variation of the RMSE that uses the median of the squared errors instead of the mean. By taking the median, RMdSE becomes significantly less sensitive to outliers. While RMSE can be heavily influenced by a few large errors, RMdSE provides a more robust measure of typical model performance, making it ideal in situations where the data contains extreme values or outliers. This makes RMdSE a better choice when you want a more representative measure of the error that does not overemphasize large deviations.
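
To see the difference on data with one extreme error, here is a short sketch comparing the two metrics. Function names and values are illustrative.

```python
import math
from statistics import mean, median

def squared_errors(actual, pred):
    """List of (actual_i - pred_i)^2."""
    return [(a - p) ** 2 for a, p in zip(actual, pred)]

def root_median_squared_error(actual, pred):
    """Square root of the median squared error."""
    return math.sqrt(median(squared_errors(actual, pred)))

def root_mean_squared_error(actual, pred):
    """Square root of the mean squared error, for comparison."""
    return math.sqrt(mean(squared_errors(actual, pred)))

actual = [100, 200, 300, 400, 500]
pred = [110, 190, 330, 400, 1500]  # the last prediction is an outlier

rmdse = root_median_squared_error(actual, pred)
rmse = root_mean_squared_error(actual, pred)
print(rmdse)           # 10.0
print(round(rmse, 1))  # 447.5
```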

D. Geometric Root Mean Squared Error

\[\text{GRMSE} = \sqrt{\left( \prod_{i=1}^n (\text{actual}_i - \text{pred}_i)^2 \right)^{\frac{1}{n}}}\]

The Geometric Root Mean Squared Error (GRMSE) computes the geometric mean of the squared differences between actual and predicted values, followed by taking the square root. This metric is particularly useful for data with multiplicative relationships or values that vary across multiple scales. GRMSE reduces the influence of outliers compared to traditional RMSE but becomes uninformative if any of the squared errors is zero, as this results in an overall error of zero. While it provides a more balanced error measure in some cases, its complexity and sensitivity to zero values make it unsuitable for some datasets. GRMSE is best suited to cases where the magnitude and proportionality of errors are crucial considerations.
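
A minimal sketch of the GRMSE formula, assuming Python 3.8 or later for `math.prod`. The function name and data are illustrative.

```python
import math

def geometric_root_mean_squared_error(actual, pred):
    """Square root of the geometric mean of the squared errors."""
    squared = [(a - p) ** 2 for a, p in zip(actual, pred)]
    return math.sqrt(math.prod(squared) ** (1 / len(squared)))

# Squared errors are 4 and 64; their geometric mean is 16.
grmse = geometric_root_mean_squared_error([10, 20], [12, 12])
print(grmse)  # 4.0

# One perfect prediction collapses the whole metric to zero.
grmse_with_zero = geometric_root_mean_squared_error([10, 20, 30], [12, 12, 30])
print(grmse_with_zero)  # 0.0
```

Algebraically, the square root of the geometric mean of the squared errors equals the geometric mean of the absolute errors, so on the same data GRMSE and GMAE coincide.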

E. Asymmetric Mean Squared Error

\[\text{AMSE} = \displaystyle\frac{1}{n} \sum_{i=1}^n \left\{\begin{array}{ll}\alpha (\text{actual}_i - \text{pred}_i)^2 & \text{if } \text{pred}_i < \text{actual}_i \\(1 - \alpha) (\text{actual}_i - \text{pred}_i)^2 & \text{if } \text{pred}_i \geq \text{actual}_i\end{array}\right.\]

The Asymmetric Mean Squared Error (AMSE) modifies the traditional Mean Squared Error by introducing different penalties for over-predictions and under-predictions. This is accomplished through a weighting factor α between 0 and 1: in the formula above, under-predictions are weighted by α and over-predictions by 1 − α, so α > 0.5 penalizes under-prediction more severely and α < 0.5 penalizes over-prediction more severely. AMSE is useful in real-world scenarios where the costs of overestimating and underestimating differ substantially, such as demand forecasting and financial risk modeling. Nevertheless, careful selection of the weighting factor is essential to ensure that the model aligns with the specific business or operational objectives.
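
The piecewise formula can be sketched as follows. The function name and the choice `alpha = 0.8` are assumptions for illustration; as in the formula, `alpha` applies when the prediction is below the actual value and `1 - alpha` otherwise.

```python
def asymmetric_mse(actual, pred, alpha):
    """Mean squared error with weight alpha on under-predictions
    (pred < actual) and weight 1 - alpha on all other predictions."""
    total = 0.0
    for a, p in zip(actual, pred):
        weight = alpha if p < a else 1 - alpha
        total += weight * (a - p) ** 2
    return total / len(actual)

# Both predictions miss the actual value of 100 by 10 units.
under_loss = asymmetric_mse([100], [90], alpha=0.8)   # about 80
over_loss = asymmetric_mse([100], [110], alpha=0.8)   # about 20
print(under_loss > over_loss)  # True
```

With this setting an under-prediction costs roughly four times as much as an over-prediction of the same size.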

Percent Error

Percentage Error is a class of loss function that computes the error and scales it against the true value. This makes the error rate scale-independent because it’s a percentage of the true value. Consequently, this loss function is less susceptible to the unit of measure used.

While the percentage error class of loss function addresses the scale-dependence issue of absolute and squared error classes, it introduces new challenges. Notably, the loss function itself lacks symmetry. Underprediction is penalized less than overprediction: when the model underpredicts, the actual value in the denominator is larger than the prediction, so the same absolute error yields a smaller percentage. In addition, for non-negative predictions the percentage error of an underprediction can never exceed 100%, while that of an overprediction is unbounded. As illustrated in the figure above, this characteristic naturally inclines the model toward underprediction. The most common loss functions are discussed in the following subsections.
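
A quick numeric check of this asymmetry, using a hypothetical single-point helper `absolute_percentage_error`: the same absolute error of 50 units is scored differently depending on which of the two values is the actual one.

```python
def absolute_percentage_error(actual, pred):
    """|actual - pred| as a percentage of |actual|."""
    return abs(actual - pred) / abs(actual) * 100

# Overprediction: actual 100, predicted 150.
over = absolute_percentage_error(100, 150)
# Underprediction by the same 50 units: actual 150, predicted 100.
under = absolute_percentage_error(150, 100)

print(over)             # 50.0
print(round(under, 1))  # 33.3
```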

A. Mean Absolute Percentage Error

\[\text{MAPE} = \frac{1}{n} \sum_{i=1}^n \left| \frac{\text{actual}_i - \text{pred}_i}{\text{actual}_i} \right| \times 100\]

The Mean Absolute Percentage Error (MAPE) is a widely used metric for assessing the accuracy of predictive models, especially in time series forecasting. It quantifies the average percentage deviation between actual and predicted values, making it intuitive and easy to comprehend. The MAPE formula computes the absolute difference between each actual and predicted value, divides it by the actual value, and then computes the average of these percentages. One advantage of MAPE is that it presents errors as percentages, facilitating comparisons of model performance across diverse datasets. However, MAPE faces certain limitations. It becomes unreliable when actual values are close to zero, and it tends to overemphasize errors for smaller values. Consequently, caution should be exercised when applying MAPE in scenarios where the data contains values near zero.
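
The sketch below implements the MAPE formula and then shows what a single near-zero actual value does to it. The function name and data are made up for the example.

```python
def mean_absolute_percentage_error(actual, pred):
    """Average of |actual_i - pred_i| / |actual_i|, as a percentage.
    Raises ZeroDivisionError if any actual value is zero."""
    ratios = [abs(a - p) / abs(a) for a, p in zip(actual, pred)]
    return sum(ratios) / len(ratios) * 100

# Percentage errors are 10%, 10%, 0% and 20%, so the MAPE is about 10.
mape = mean_absolute_percentage_error([100, 200, 400, 50], [110, 180, 400, 60])
print(round(mape, 2))  # 10.0

# Same absolute miss of 10 units, but on an actual value of 1:
# that one point alone has a percentage error of 1000%.
mape_near_zero = mean_absolute_percentage_error([100, 200, 400, 1], [110, 180, 400, 11])
print(round(mape_near_zero, 2))  # 255.0
```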

B. Median Absolute Percentage Error

\[\text{MdAPE} = \text{median}\left( \left| \frac{\text{actual}_i - \text{pred}_i}{\text{actual}_i} \right| \times 100 \right)\]

The Median Absolute Percentage Error (MdAPE) is a robust metric used to assess the accuracy of predictive models. It calculates the median of the absolute percentage errors between actual and predicted values. Unlike the Mean Absolute Percentage Error (MAPE), which takes the average, MdAPE employs the median, making it less susceptible to extreme outliers and more reliable when dealing with datasets containing occasional large errors. This property makes MdAPE particularly useful in situations where the data distribution is skewed or when outliers could disproportionately influence the error measurement. However, like MAPE, MdAPE can still pose challenges when actual values are close to zero, as percentage errors can become substantial or undefined. Overall, MdAPE offers a more stable and robust error metric in scenarios where minimizing the impact of outliers is paramount.
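
As an illustration, the sketch below computes the MdAPE on made-up data where one small actual value produces a percentage error of 1000%. The function name is an assumption.

```python
from statistics import median

def median_absolute_percentage_error(actual, pred):
    """Median of |actual_i - pred_i| / |actual_i|, as a percentage.
    Raises ZeroDivisionError if any actual value is zero."""
    return median(abs(a - p) / abs(a) * 100 for a, p in zip(actual, pred))

actual = [100, 200, 400, 1]
pred = [110, 180, 400, 11]

# Percentage errors are 10%, 10%, 0% and 1000%; the median stays near 10,
# whereas the mean of the same four values would be about 255.
mdape = median_absolute_percentage_error(actual, pred)
print(round(mdape, 2))  # 10.0
```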

C. Weighted Absolute Percentage Error

\[\text{WAPE} = \frac{\sum_{i=1}^n w_i \left| \text{actual}_i - \text{pred}_i \right|}{\sum_{i=1}^n w_i \times \text{actual}_i} \times 100\]

The Weighted Absolute Percentage Error (WAPE) is a metric used to evaluate the accuracy of predictive models. Unlike the standard Mean Absolute Percentage Error (MAPE), WAPE takes into account the varying significance of each observation. This makes it particularly useful in scenarios where certain observations should contribute more to the overall error calculation. WAPE is advantageous when working with datasets where the impact of errors should be proportional to the size or importance of the data points. However, like other percentage-based metrics, WAPE can be problematic when actual values are close to zero. This can inflate the error values or lead to instability in the metric.
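
A minimal sketch of the WAPE formula as written above, with hypothetical per-observation weights. The function name and data are assumptions.

```python
def weighted_absolute_percentage_error(actual, pred, weights):
    """Weighted sum of absolute errors divided by the weighted sum of the
    actual values, as a percentage."""
    numerator = sum(w * abs(a - p) for a, p, w in zip(actual, pred, weights))
    denominator = sum(w * a for a, w in zip(actual, weights))
    return numerator / denominator * 100

actual = [100, 200, 300]
pred = [110, 180, 300]
weights = [2, 1, 2]

# Numerator: 2*10 + 1*20 + 2*0 = 40; denominator: 200 + 200 + 600 = 1000.
wape = weighted_absolute_percentage_error(actual, pred, weights)
print(round(wape, 2))  # 4.0
```

Because the division happens once over the totals rather than per observation, a single small actual value does not blow up the result; the metric only becomes unstable when the weighted sum of actual values is itself close to zero.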

Symmetric Error

Symmetric error is a class of loss functions that tries to remedy the two main issues of the percentage error, namely that it is asymmetric and that it is undefined, and therefore discontinuous, at the point where actual = 0.

The symmetric error attempts to address the asymmetry of the percentage error while preserving the advantage of being scale-independent. From the initial perspective presented earlier, it appears that the symmetric error class of functions has successfully resolved the issue.

However, upon closer examination, the symmetric error is not actually symmetrical. The illustration above demonstrates how the function behaves with over- and under-predictions, given a fixed actual value of 100. This differs from the previous illustration, which varied both the actual and predicted values. The figure shows that, for a fixed actual value, the symmetric error penalizes underprediction more heavily than overprediction of the same size and therefore favors overprediction, the opposite of the bias exhibited by the percentage error. The most common loss functions are discussed in the subsequent subsections.
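
The fixed-actual behaviour can be checked with a couple of numbers. The single-point helper `symmetric_ape` is a hypothetical name for the per-observation term of the symmetric formulas.

```python
def symmetric_ape(actual, pred):
    """200 * |actual - pred| / (|actual| + |pred|) for a single point."""
    return 200 * abs(actual - pred) / (abs(actual) + abs(pred))

# Fixed actual value of 100, missing by 50 units in each direction.
over = symmetric_ape(100, 150)   # 200 * 50 / 250
under = symmetric_ape(100, 50)   # 200 * 50 / 150

print(over)             # 40.0
print(round(under, 1))  # 66.7
```

The underprediction shrinks the denominator, so it receives the larger penalty.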

A. Symmetric Mean Absolute Percentage Error

\[\text{sMAPE} = \frac{100}{n} \sum_{t=1}^n \frac{|\text{actual}_t - \text{pred}_t|}{(|\text{actual}_t| + |\text{pred}_t|) / 2}\]

The Symmetric Mean Absolute Percentage Error (sMAPE) is a widely used metric for evaluating the accuracy of forecasting models. It measures the percentage difference between forecasted and actual values and is intended to treat over-predictions and under-predictions more evenly than MAPE, although, as discussed above, it still penalizes under-predictions more for a fixed actual value. Unlike traditional MAPE, sMAPE normalizes the error by the average of the absolute actual and forecasted values. This normalization bounds each term between 0% and 200%, which limits the impact of very large or small values and keeps the metric scale-independent. However, sMAPE remains sensitive to values near zero and is undefined when the actual and forecasted values are both zero.
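
A short sketch of the sMAPE formula on two illustrative points; the function name is an assumption, and the zero-over-zero case is deliberately left unhandled.

```python
def smape(actual, pred):
    """Mean of |actual - pred| / ((|actual| + |pred|) / 2), as a percentage.
    Raises ZeroDivisionError when an actual/pred pair is both zero."""
    terms = [abs(a - p) / ((abs(a) + abs(p)) / 2) for a, p in zip(actual, pred)]
    return 100 * sum(terms) / len(terms)

# One overprediction and one underprediction, each off by 50 units.
# The individual terms are 40% and about 66.7%.
score = smape([100, 100], [150, 50])
print(round(score, 2))  # 53.33
```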

B. Symmetric Median Absolute Percentage Error

\[\text{sMdAPE} = \text{median} \left( \frac{200 \times |\text{actual}_t - \text{pred}_t|}{|\text{actual}_t| + |\text{pred}_t|} \right)\]

The Symmetric Median Absolute Percentage Error (sMdAPE) is a robust error metric that measures the percentage difference between forecasted and actual values. Unlike sMAPE, it aggregates with the median rather than the mean to reduce the impact of outliers. Like sMAPE, it normalizes the absolute error by the average of the absolute actual and forecasted values, so it shares the same bounded, scale-independent terms and the same residual bias. This makes it a more stable measure of forecasting accuracy in datasets with extreme values or skewed distributions, and a reasonable choice when a robust, outlier-resistant error metric is required.
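
A minimal sketch of the sMdAPE formula; the function name and data are illustrative.

```python
from statistics import median

def smdape(actual, pred):
    """Median of 200 * |actual - pred| / (|actual| + |pred|)."""
    return median(
        200 * abs(a - p) / (abs(a) + abs(p)) for a, p in zip(actual, pred)
    )

# Per-point values are 40.0, about 66.7 and 0.0; the median is 40.0.
score = smdape([100, 100, 100], [150, 50, 100])
print(score)  # 40.0
```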

Summary

Loss functions significantly impact the model’s performance in real-world scenarios. The loss functions covered in this article can be compared along the following properties:

- Scale-Free: The loss function remains unaffected by the scale of the input data.

- Continuous: The loss function does not exhibit gaps or discontinuities due to potential issues with invalid inputs, such as dividing by zero.

- Symmetric: The loss function does not favor either over- or under-predictions.

- Bias (Favors Over- or Under-Prediction): If the loss function is not symmetric, the direction of error it favors. "Custom" means the direction is set through user-chosen weights.

- Outlier Robust: The loss function can handle outliers without significantly affecting the results.

| Loss Function | Scale-Free | Continuous | Bias | Outlier Robust |
|---|---|---|---|---|
| Mean Absolute Error | No | Yes | Equal | No |
| Median Absolute Error | No | Yes | Equal | Yes |
| Geometric Mean Absolute Error | No | No | Equal | No |
| Weighted Mean Absolute Error | No | Yes | Custom | No |
| Mean Squared Error | No | Yes | Equal | No |
| Root Mean Squared Error | No | Yes | Equal | No |
| Root Median Squared Error | No | Yes | Equal | Yes |
| Geometric Root Mean Squared Error | No | No | Equal | No |
| Asymmetric Mean Squared Error | No | Yes | Custom | No |
| Mean Absolute Percentage Error | Yes | No | Under | No |
| Median Absolute Percentage Error | Yes | No | Under | Yes |
| Weighted Absolute Percentage Error | Yes | No | Custom | No |
| Symmetric Mean Absolute Percentage Error | Yes | No | Over | No |
| Symmetric Median Absolute Percentage Error | Yes | No | Over | Yes |

References: